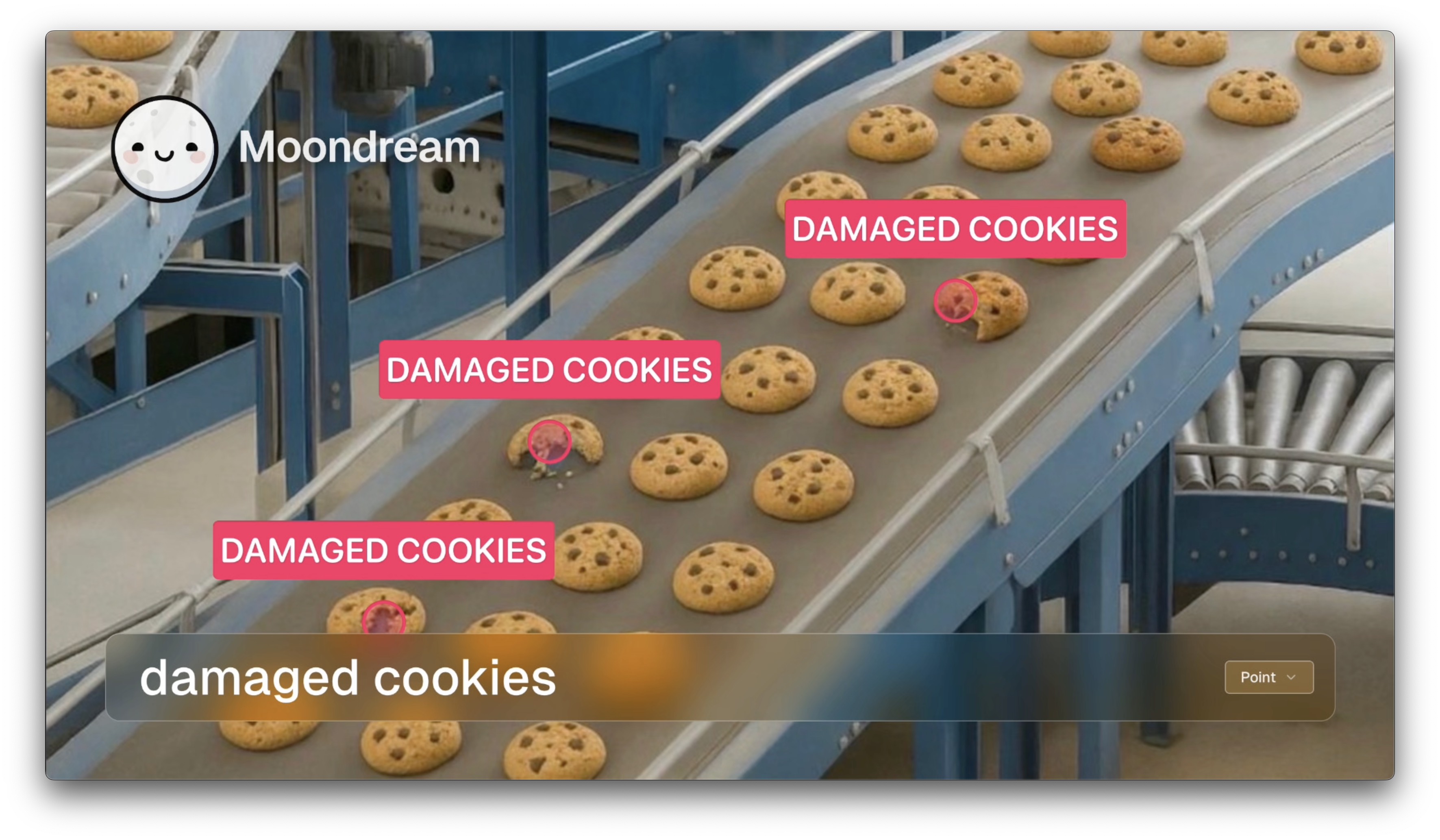

Frontier vision AI, engineered for scale

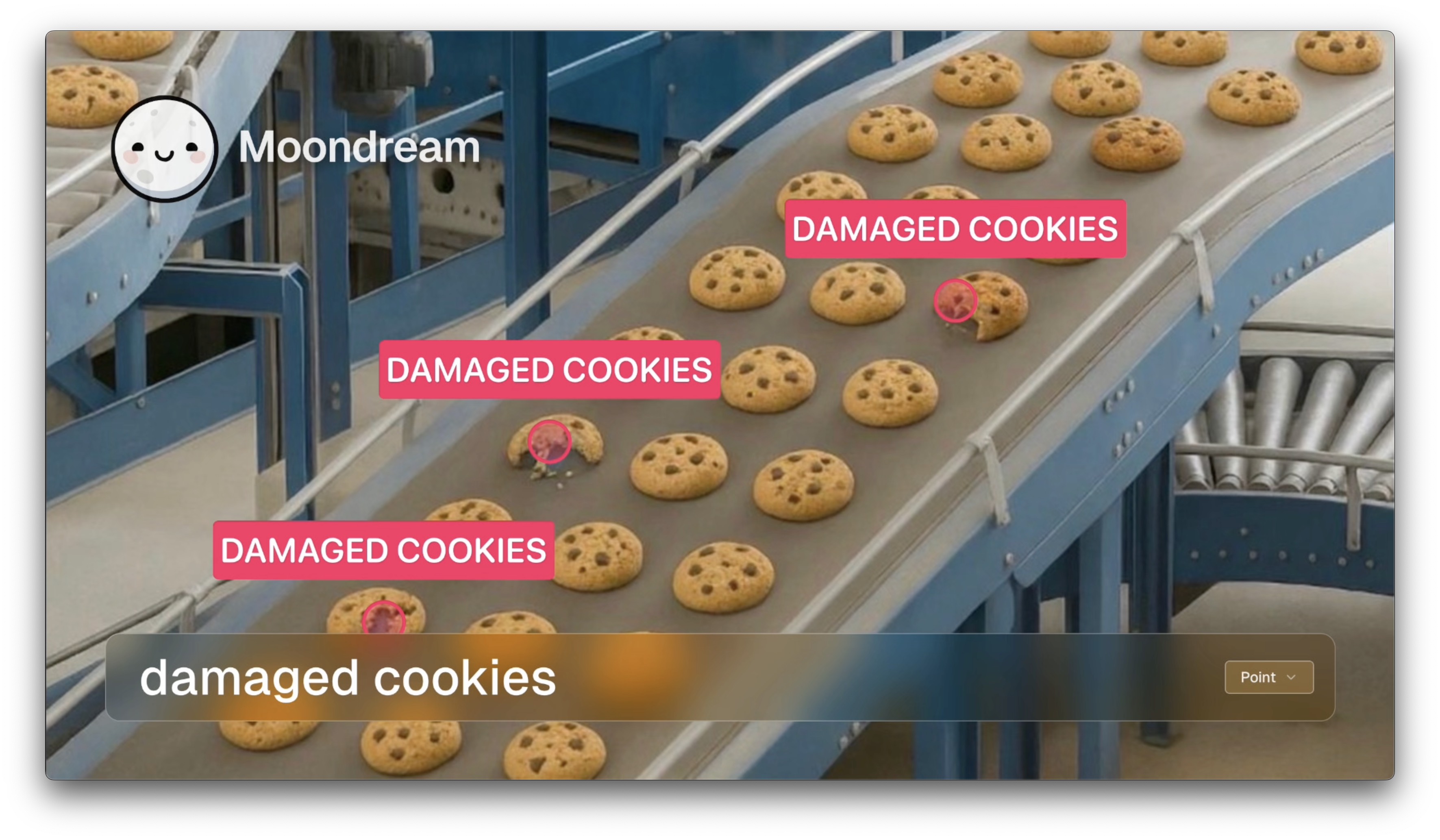

State-of-the-art visual understanding at speeds that make continuous processing possible. Point, detect, count, and reason—without compromise.

Build Amazing Vision AI Products.

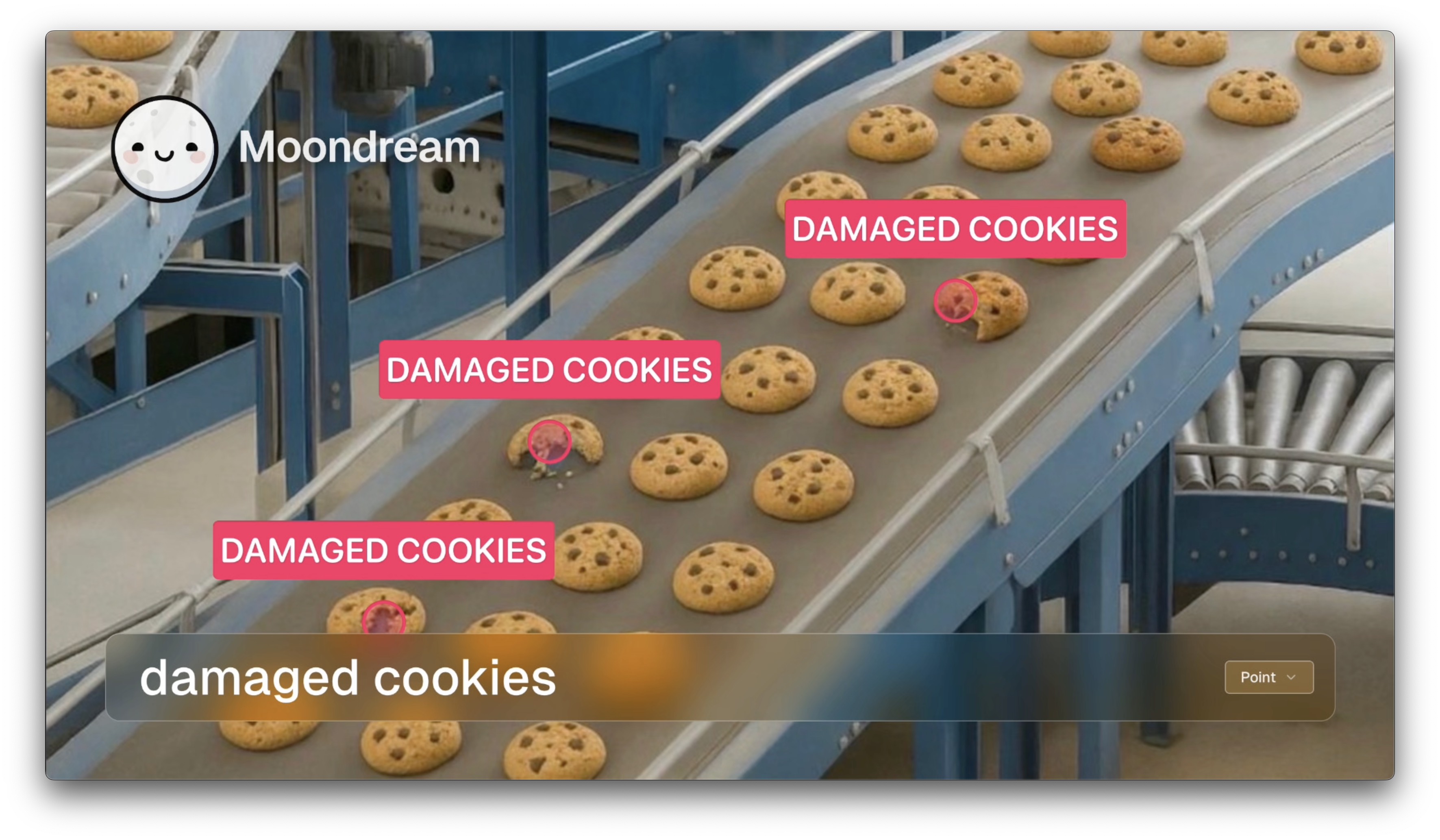

From robotics to enterprise automation, Moondream powers the next generation of intelligent systems.

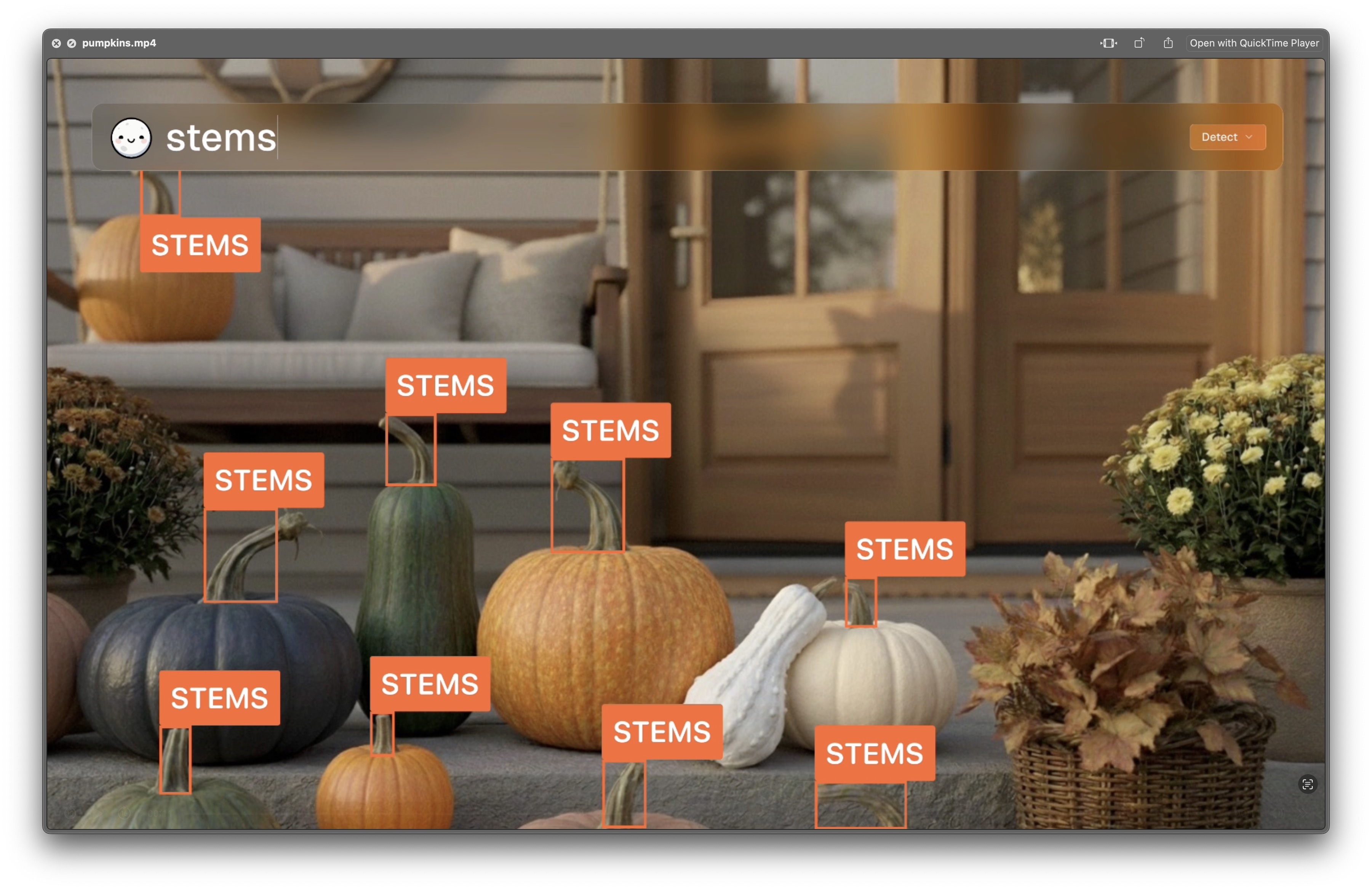

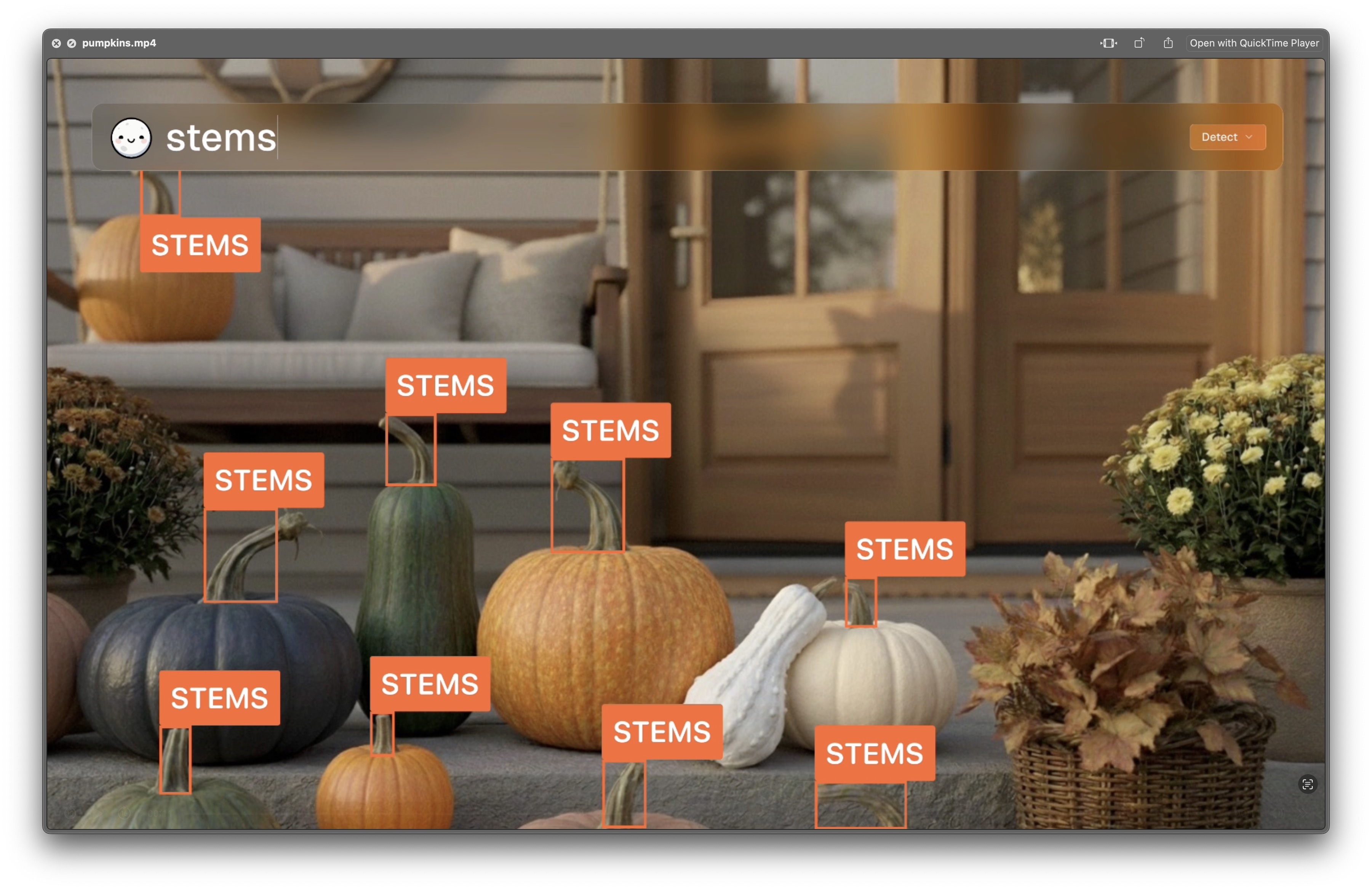

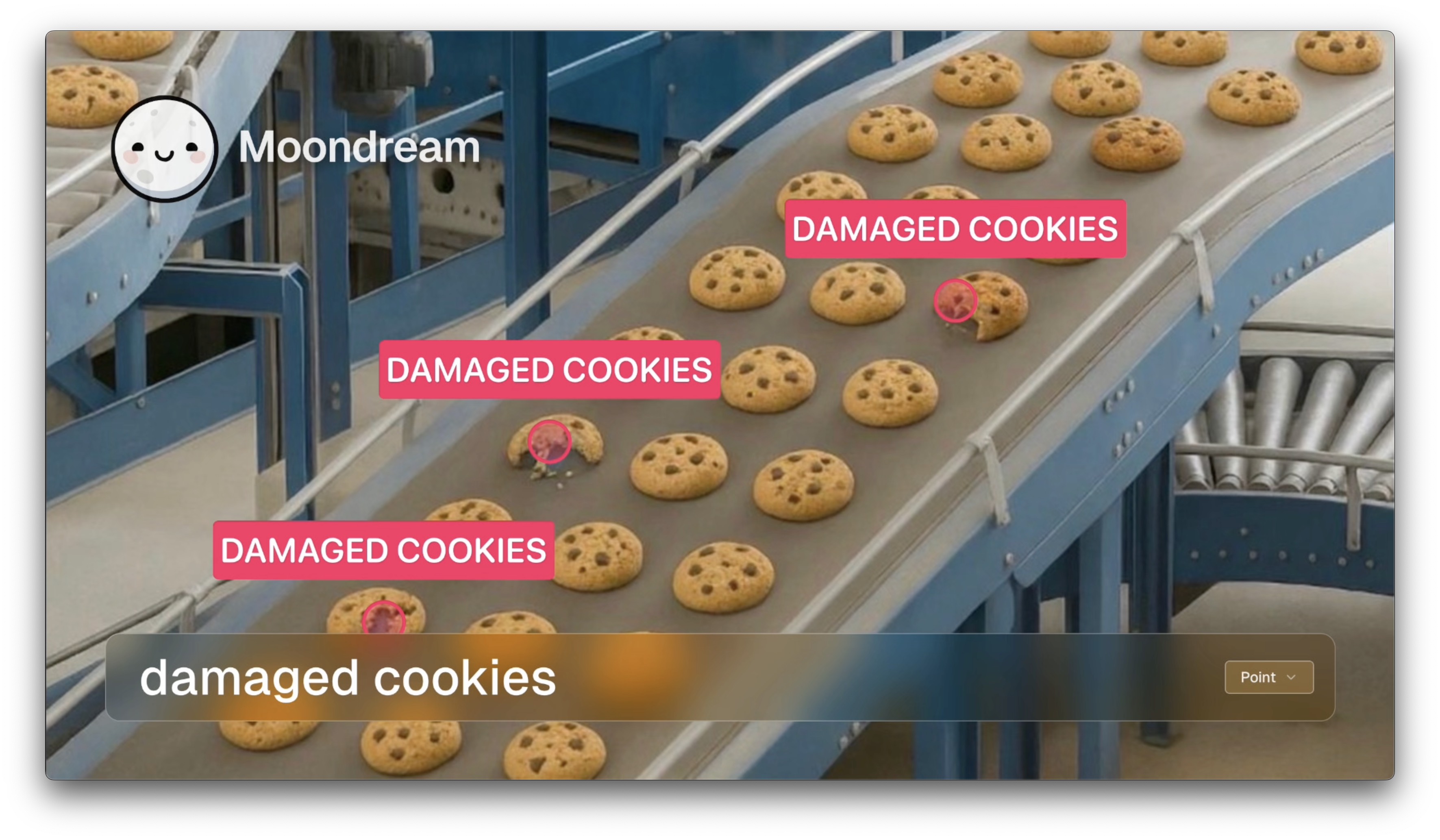

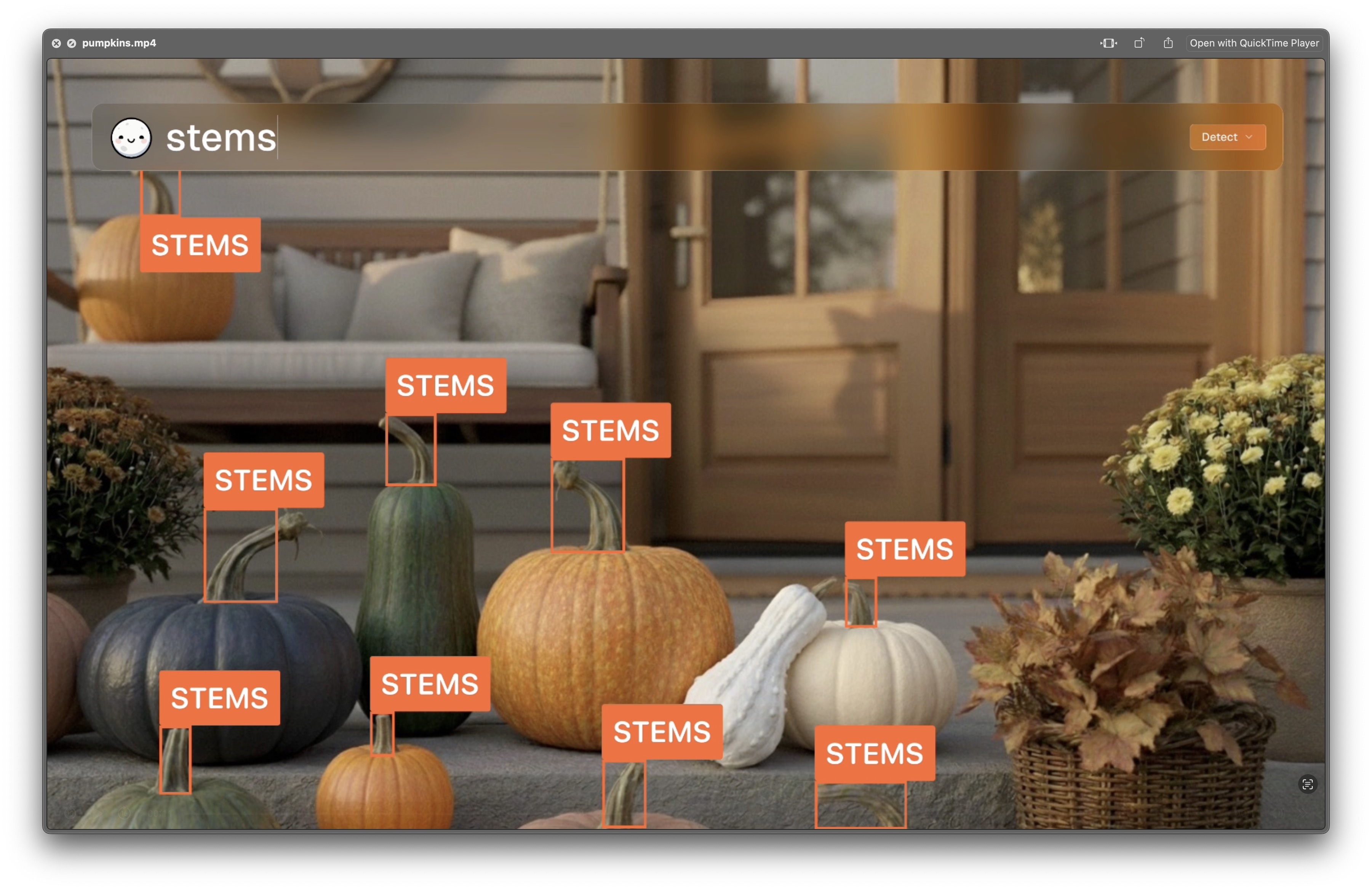

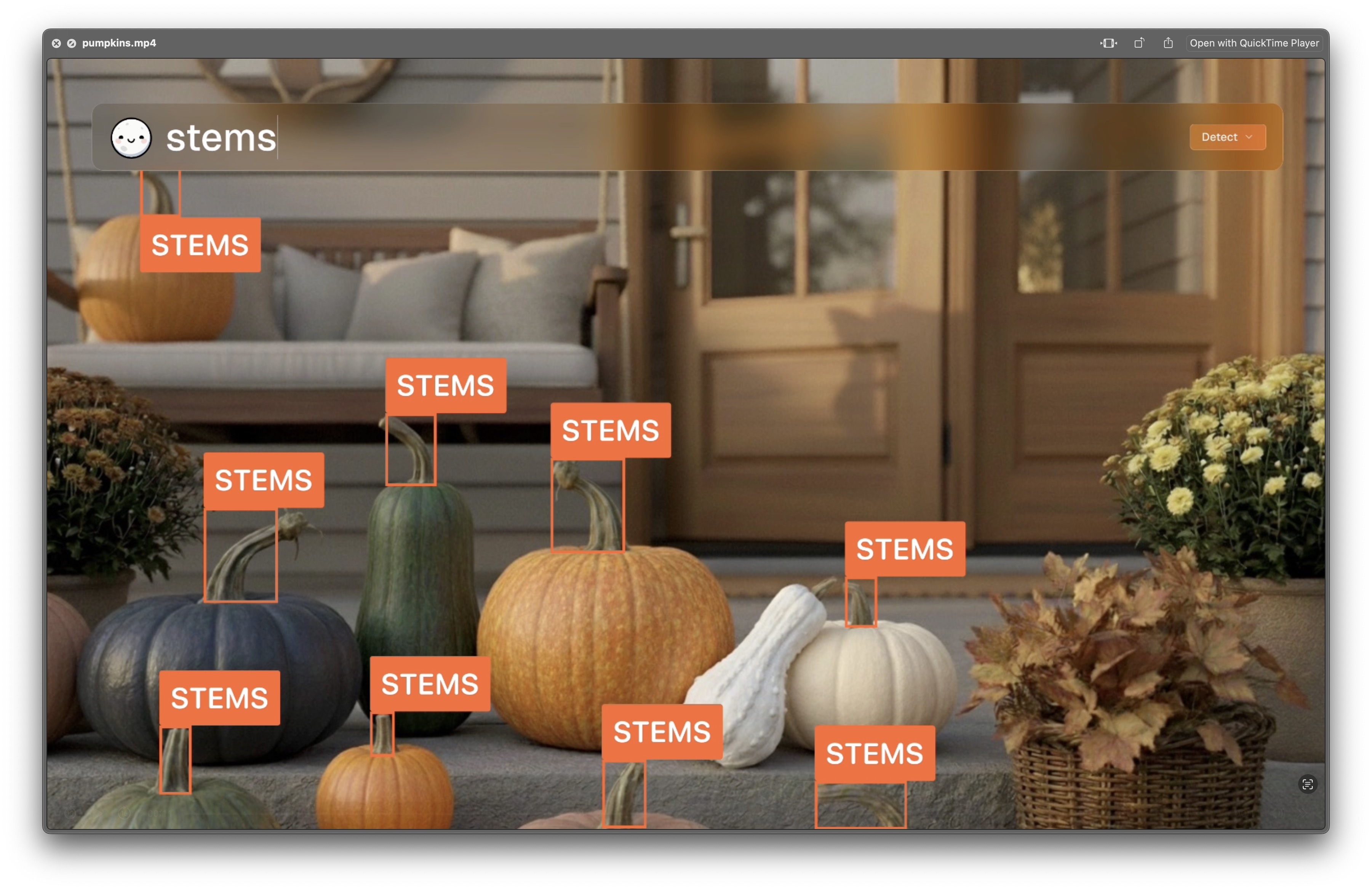

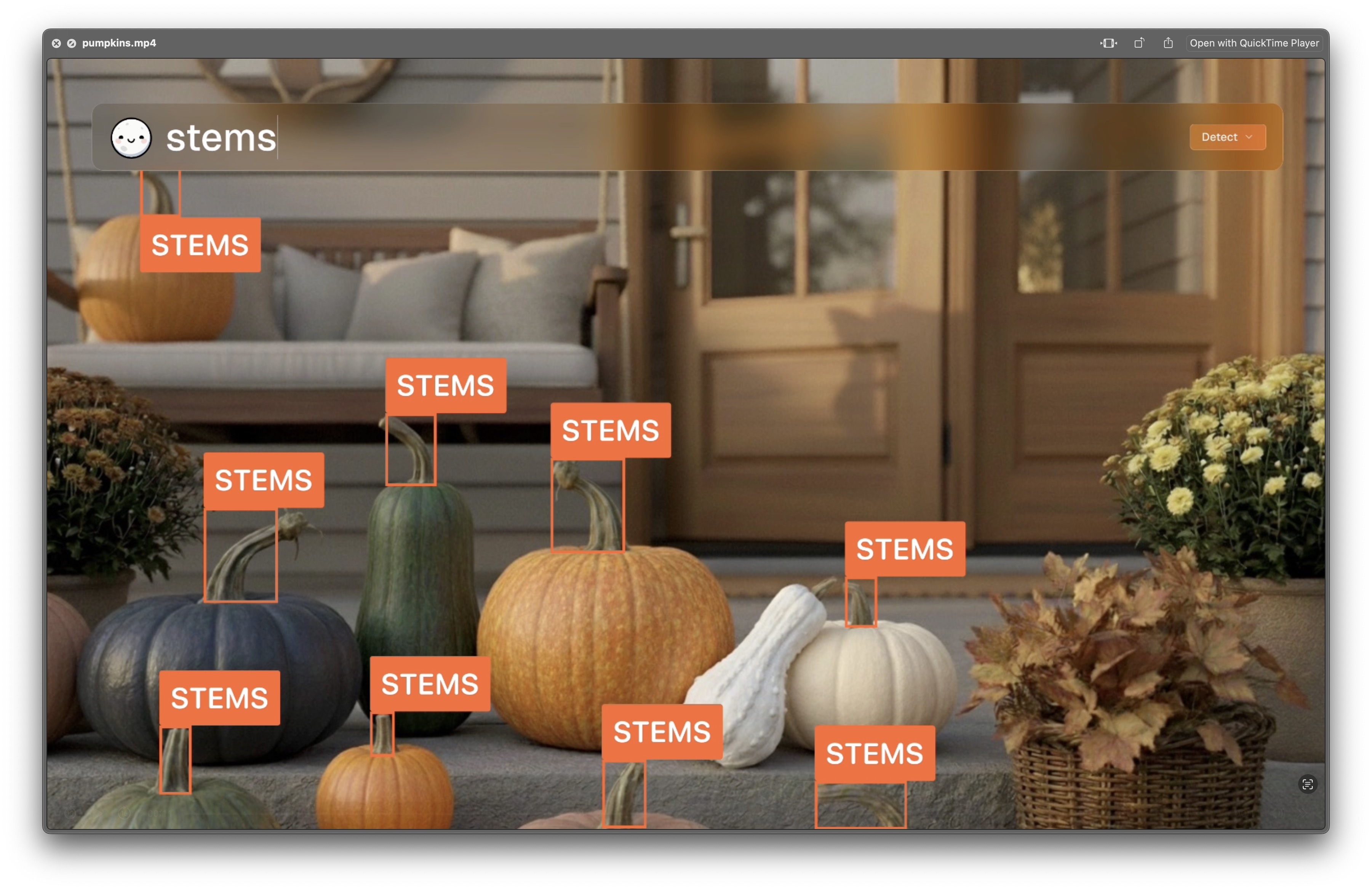

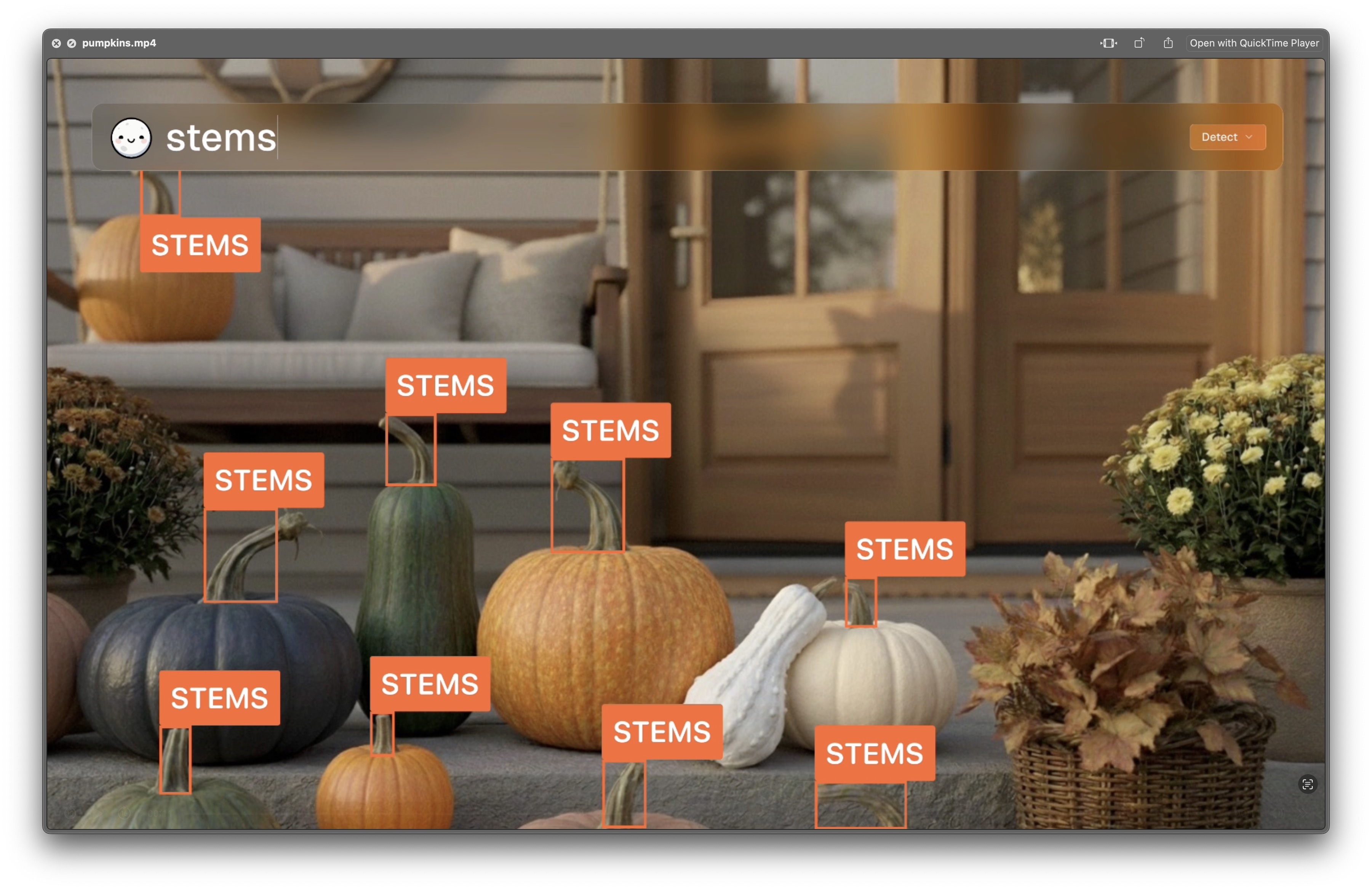

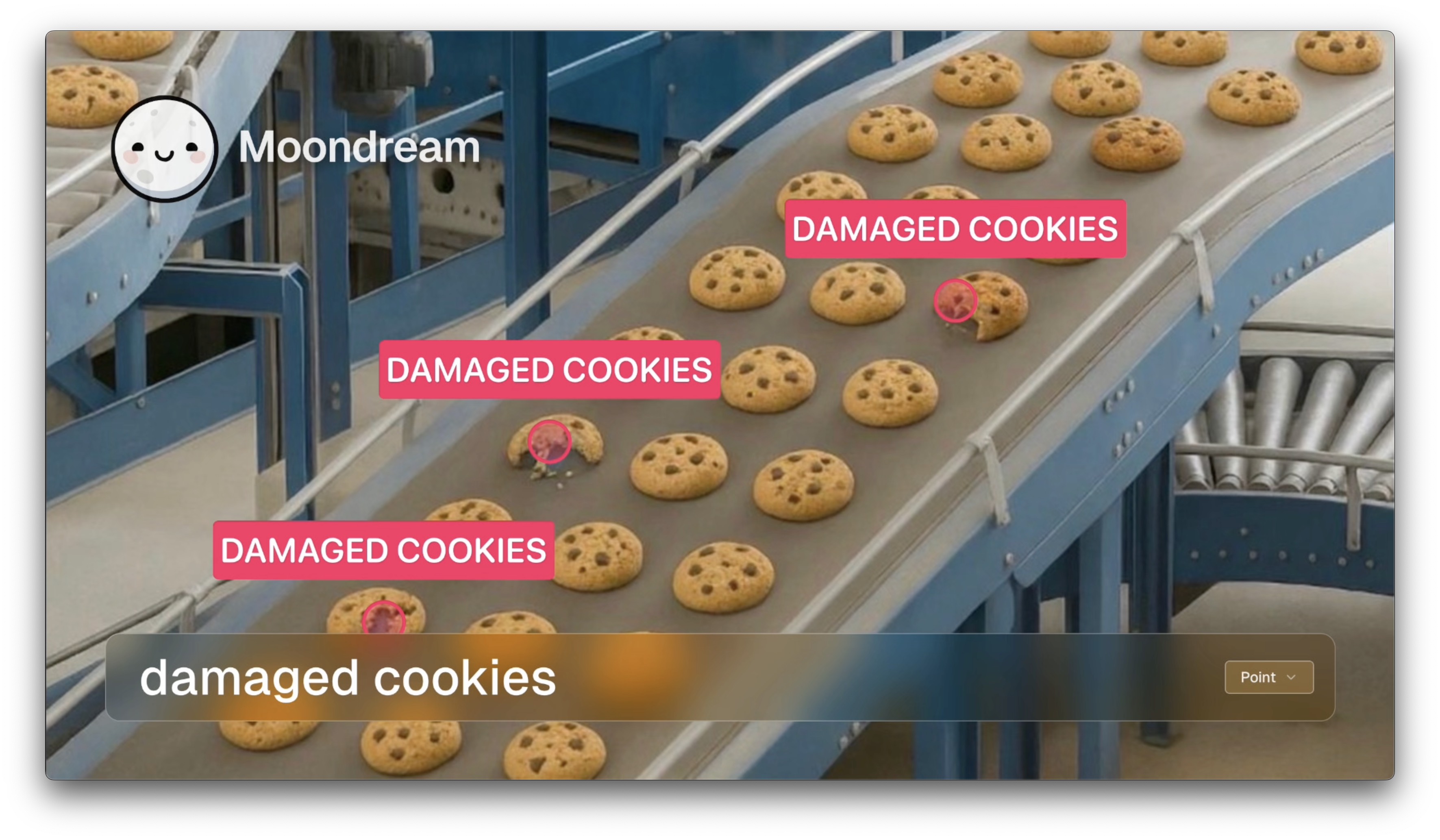

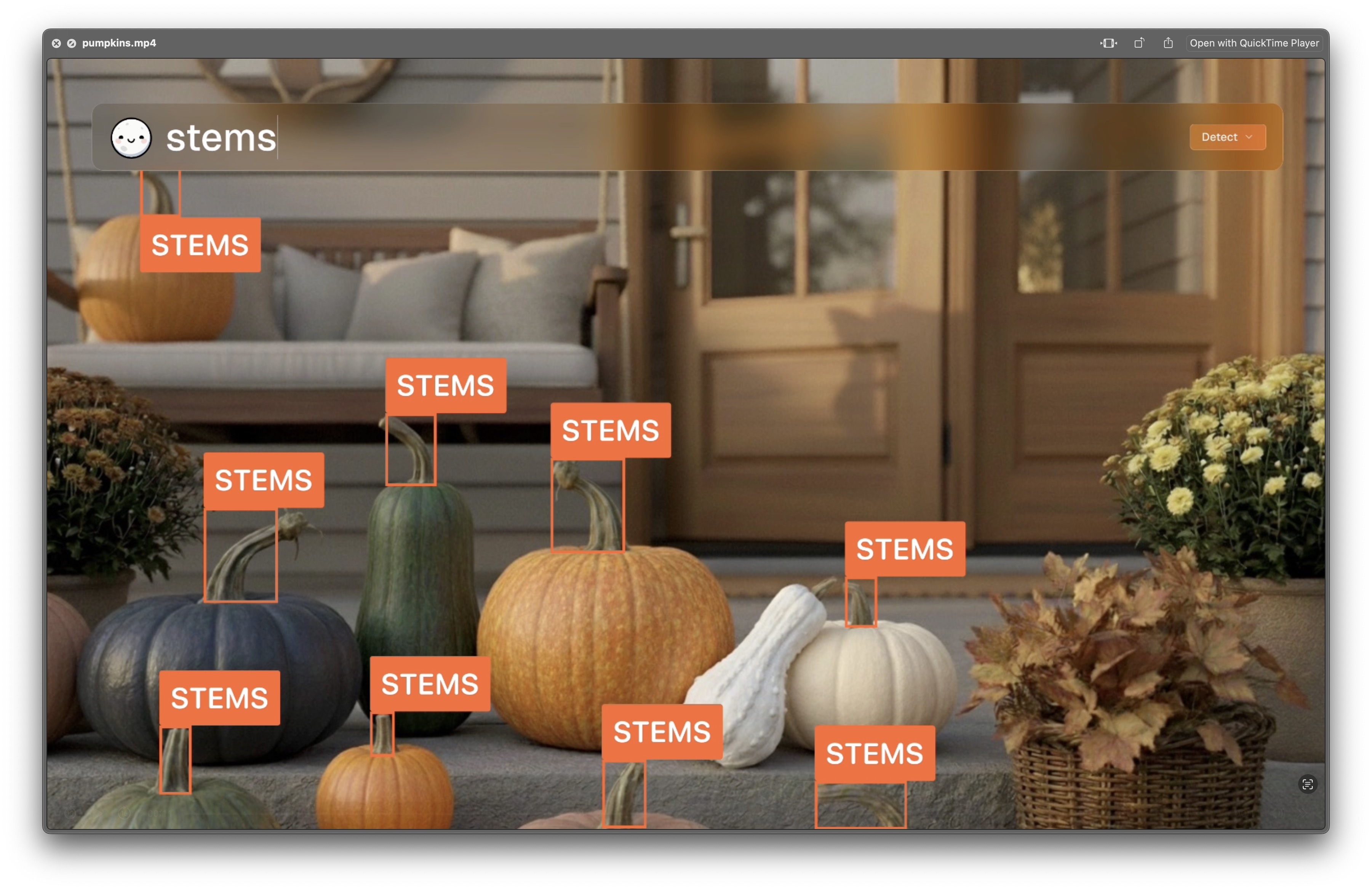

Generate Media Tags

Make every image and video searchable. Automatically generate tags, extract metadata, and find exactly what you need across millions of files, no manual labeling required.

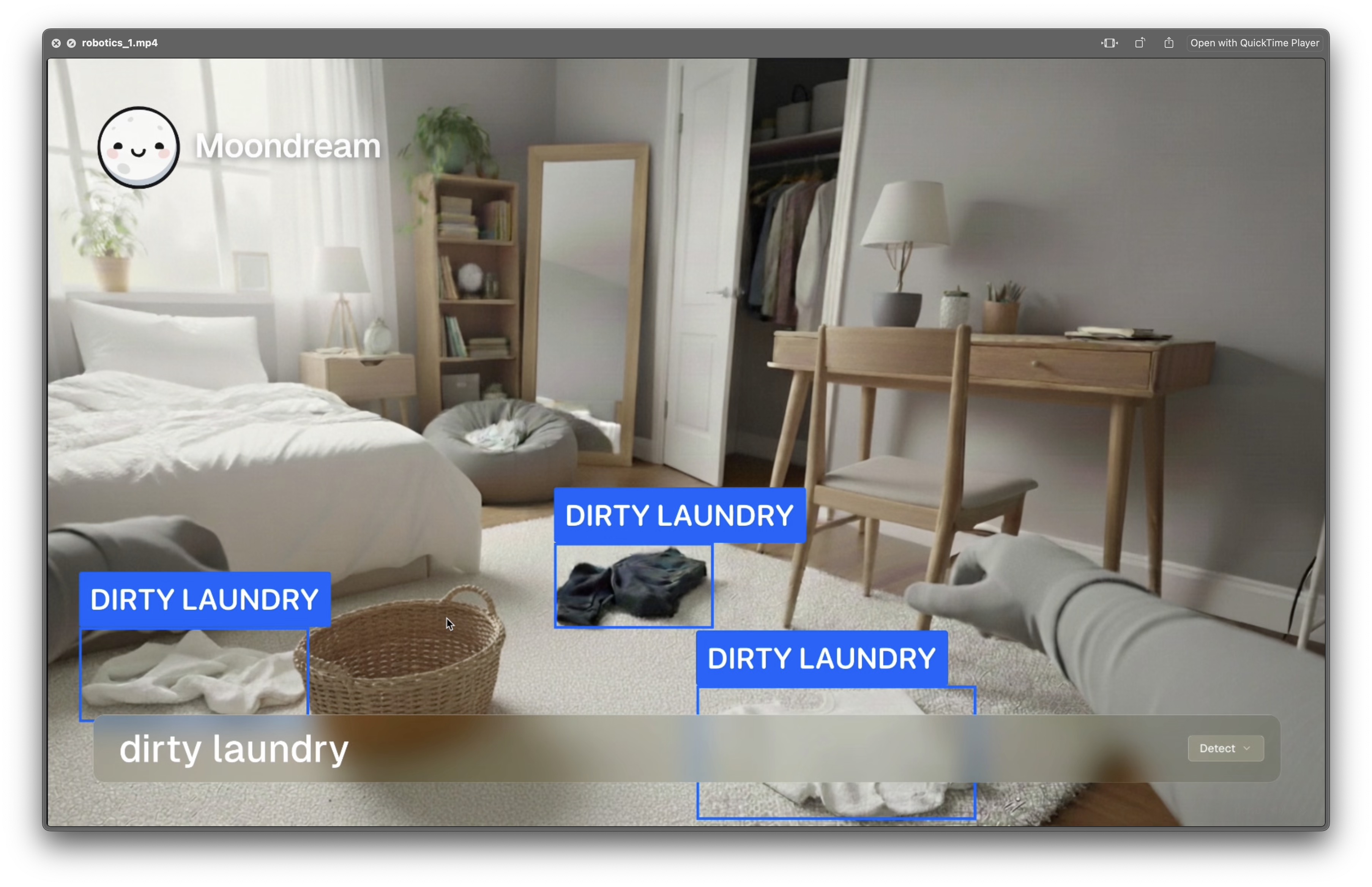

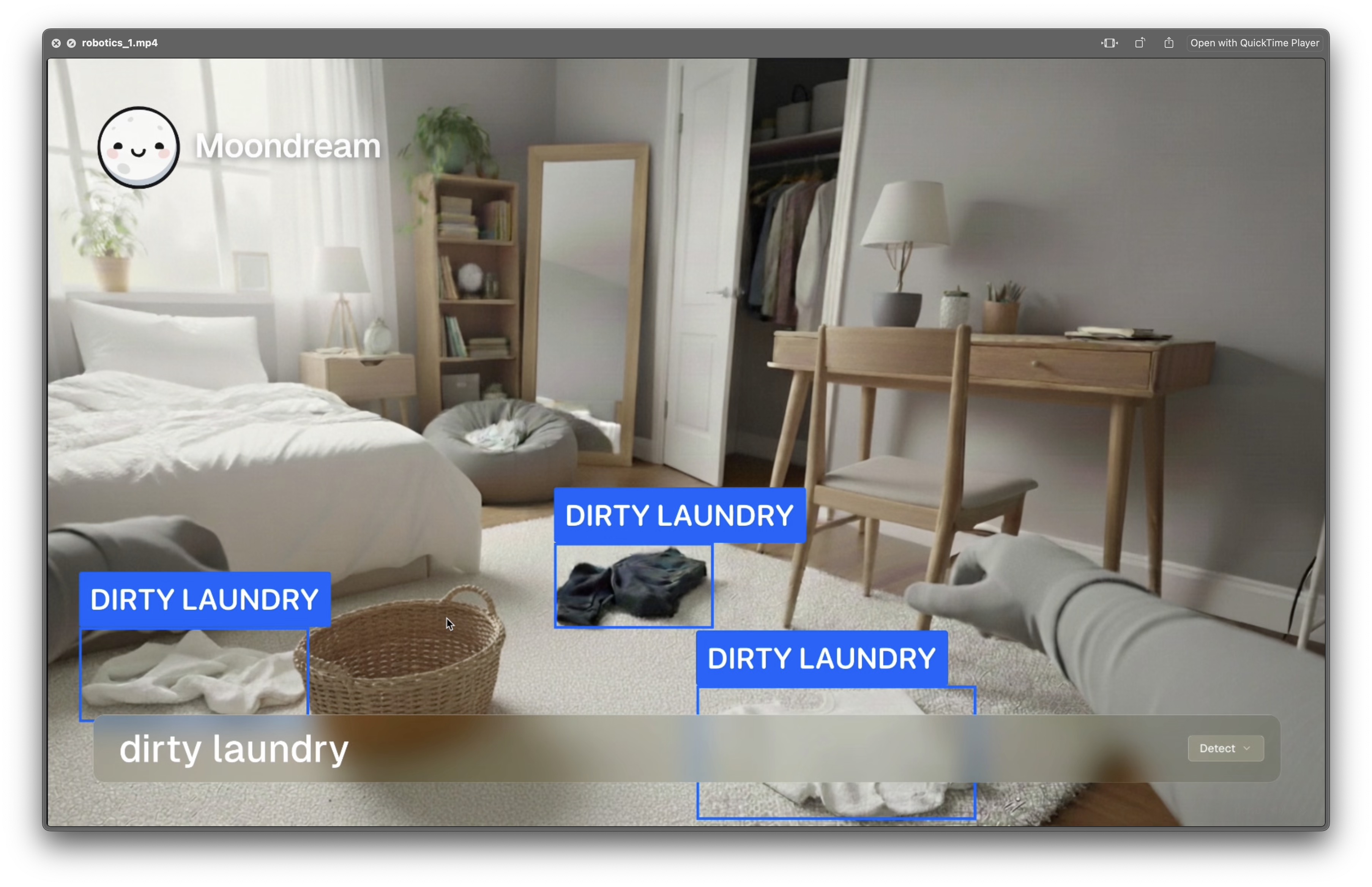

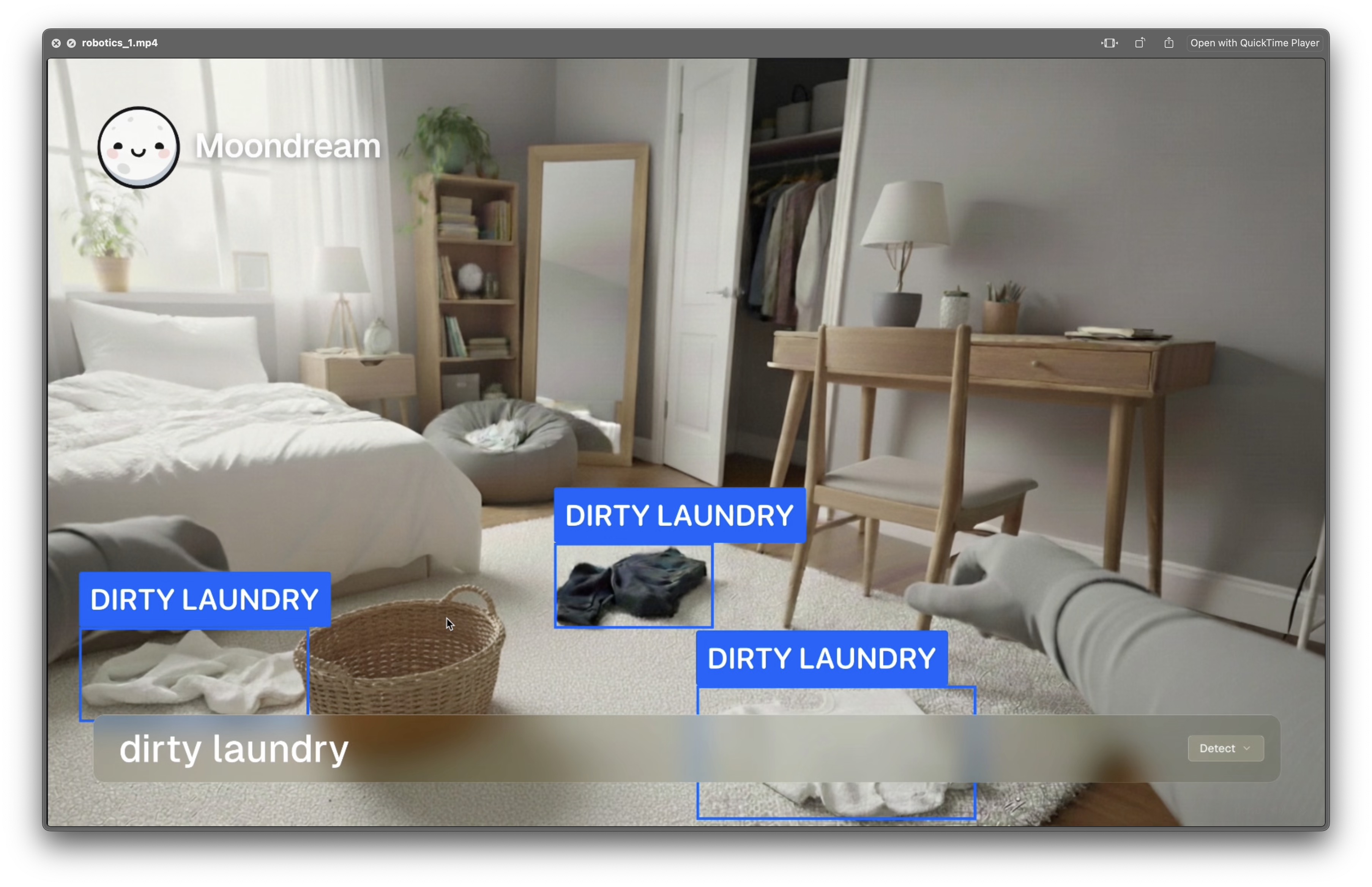

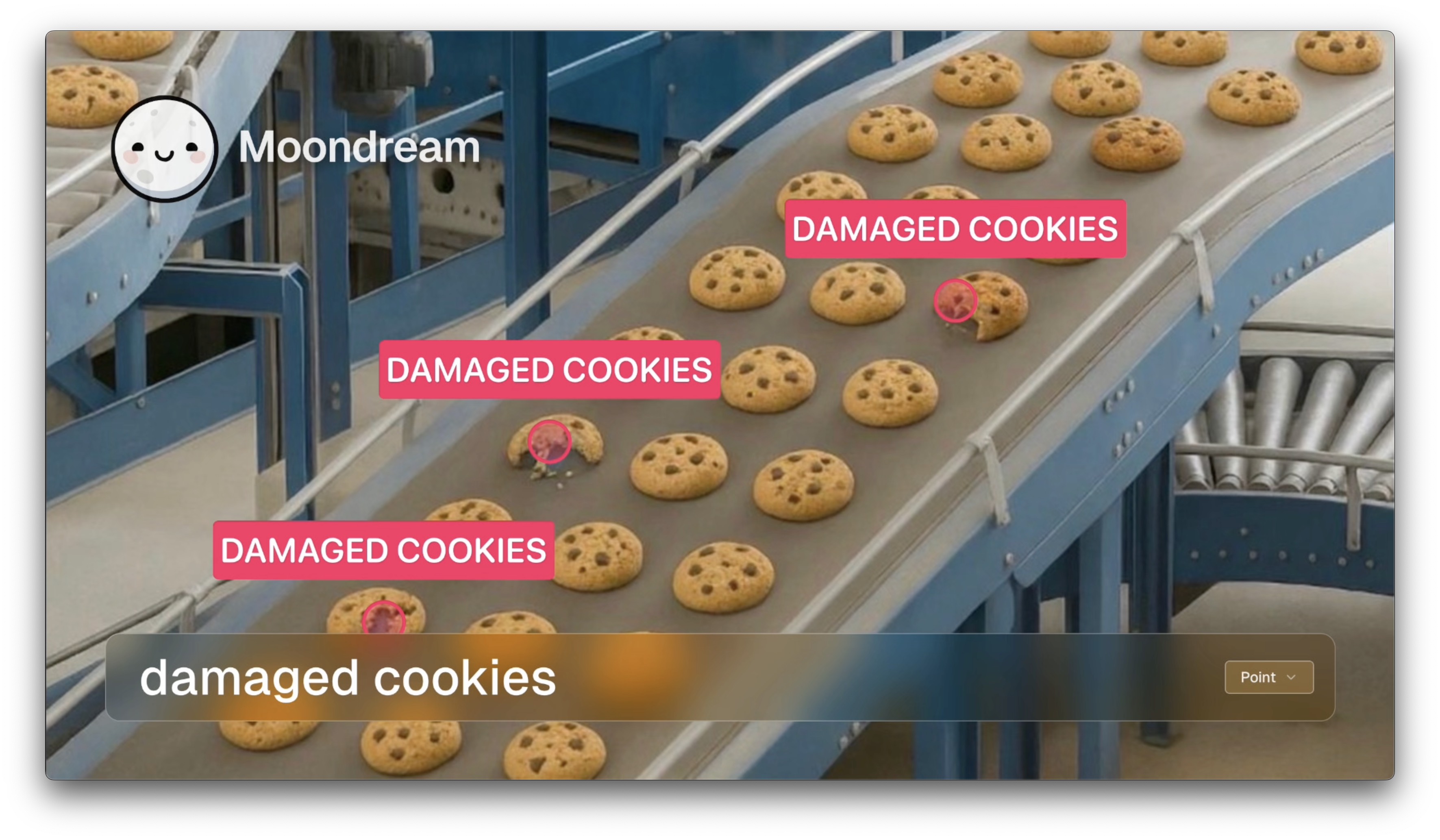

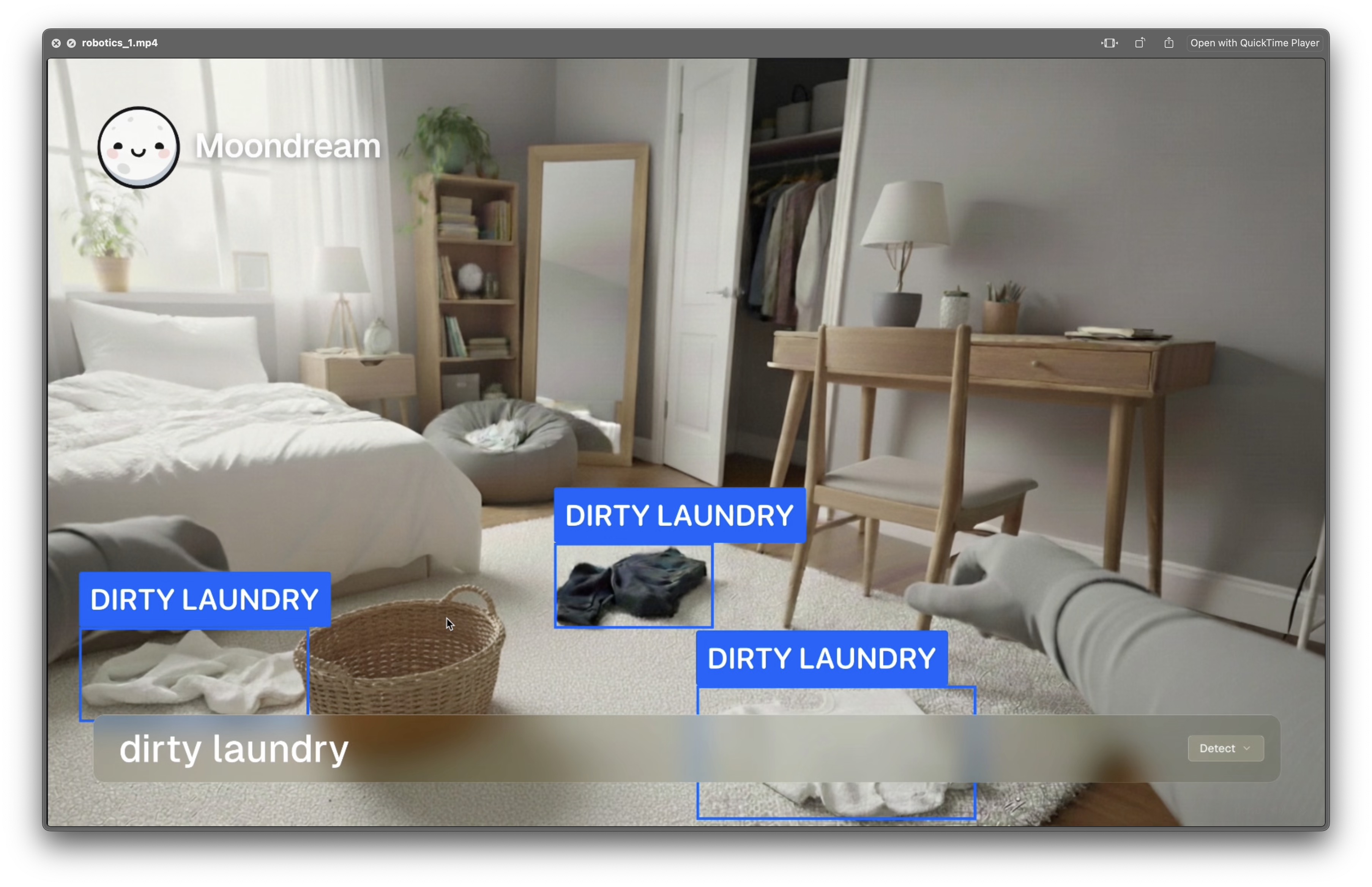

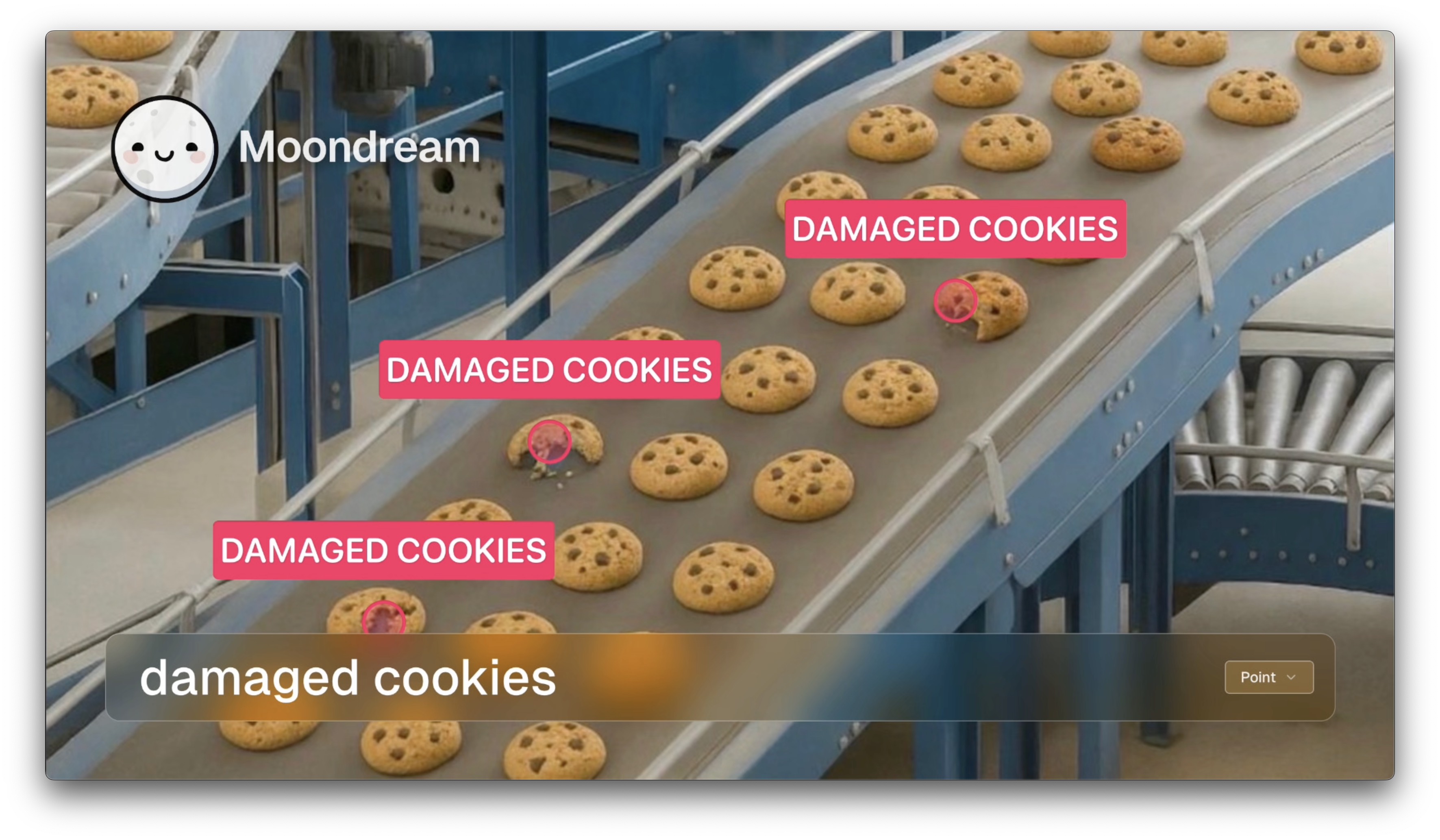

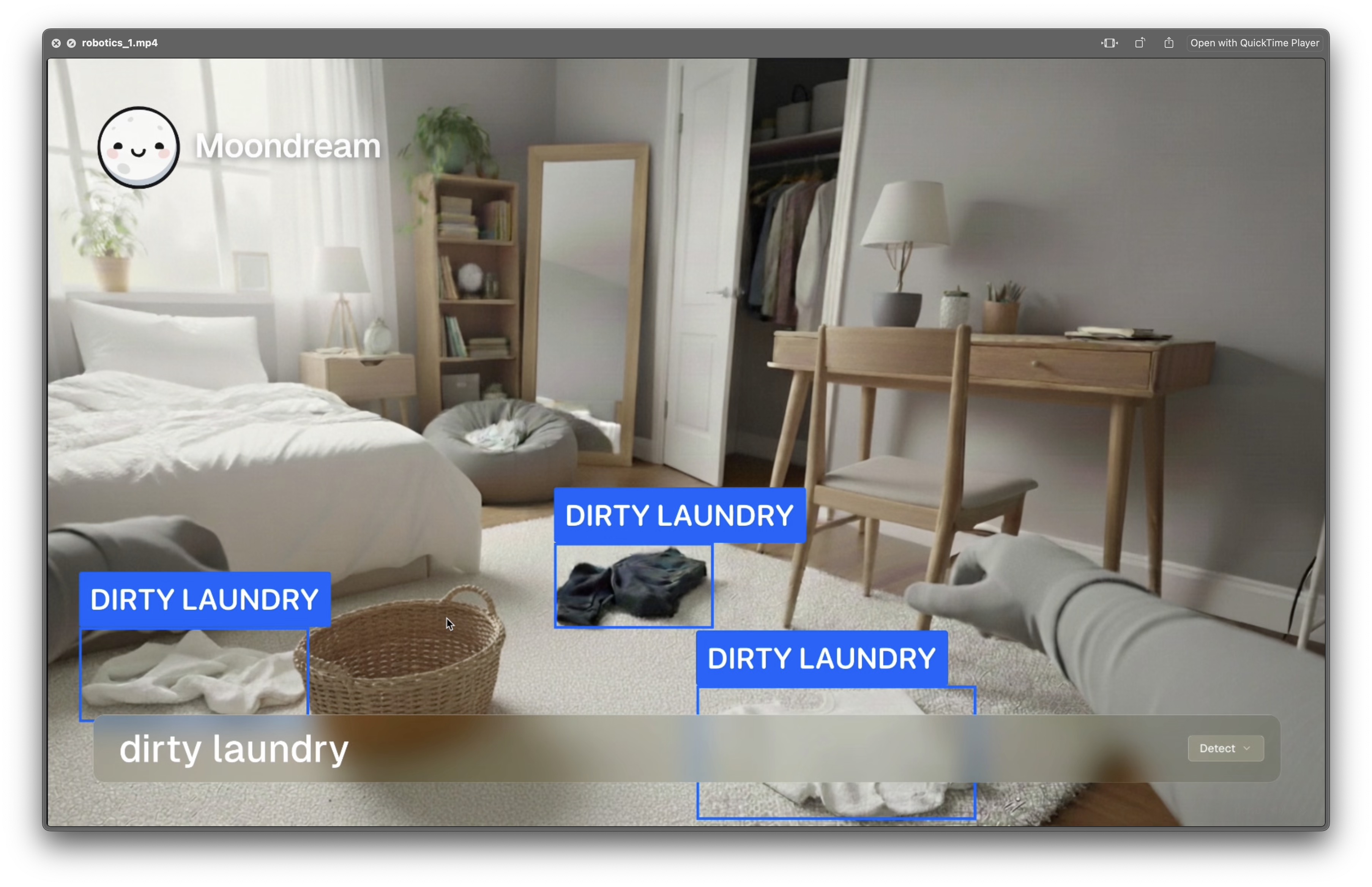

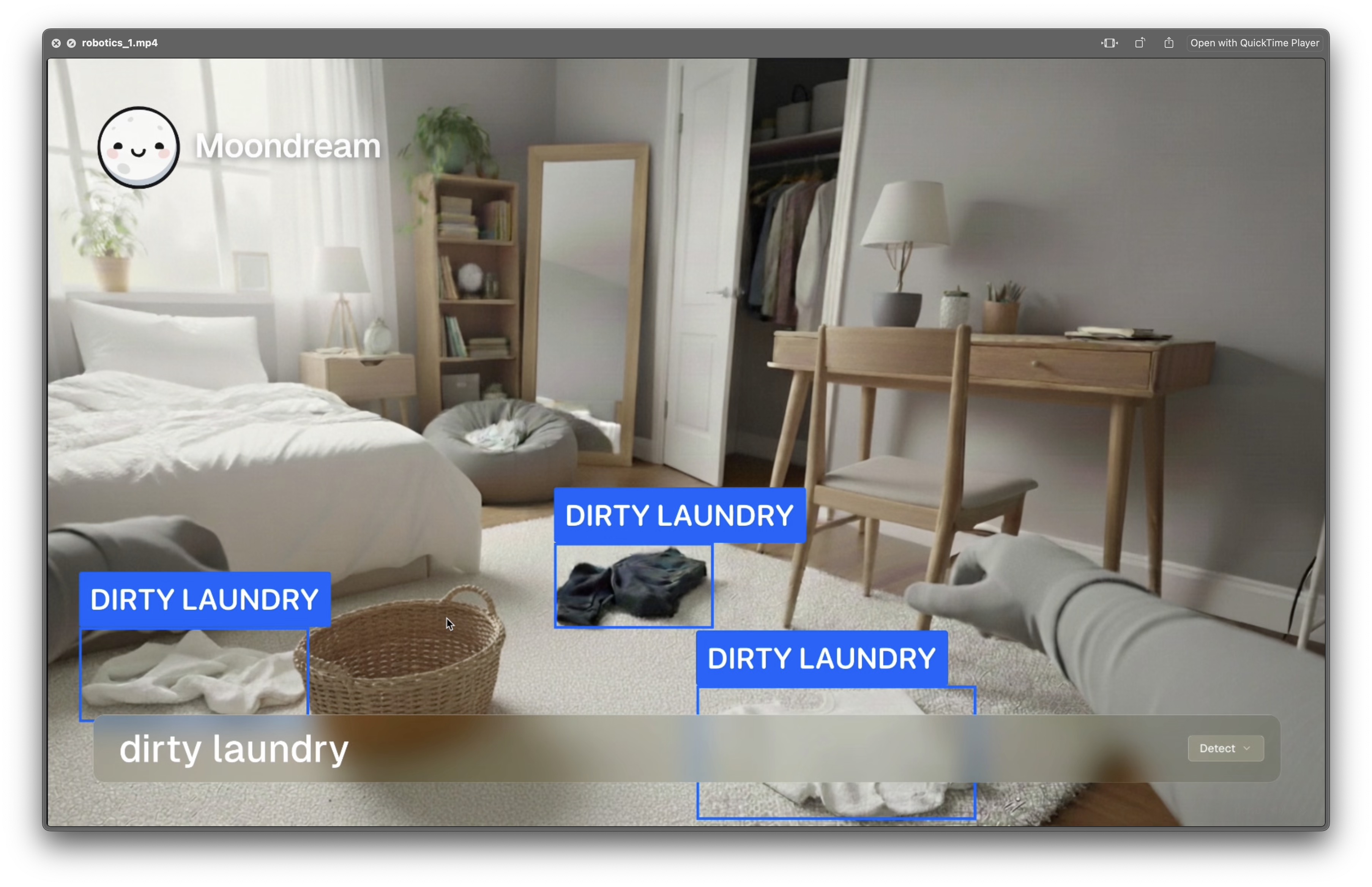

Smart Robotics

Give robots the gift of sight. Natural language prompts like 'Find the red ball' or 'Is the path clear?' enable flexible, adaptive behavior without retraining.

UI Automation & Testing

Break free from brittle selectors. Moondream understands UI elements semantically, so prompts like 'Locate the Submit button' or 'Is an error displayed?' keep test automation resilient and maintainable.


Get Running in Minutes.

Moondream is open source, so you can install and run it anywhere for free. Get it running on your own computer or in our cloud in minutes.

Run locally with Moondream Station:

- Free to use

- Works with our Python and Node clients

- Works offline, fully under your control

- Runs on CPU or GPU

Or run in our cloud:

- Spin up instantly, with no downloads or DevOps

- $5 in free monthly credits, no card required

- Predictable pay-as-you-go pricing

- 2 requests per second (RPS) on the free tier, scaling to 10 RPS or more with paid credits

Trusted by Developers Everywhere.

Used in real-world applications across retail, logistics, healthcare, defense, and more.